Part V: The Abundance Institute AI and Post-Election Update

Tracking the Impact of AI on the 2024 U.S. Election

Welcome to the post-election installment of our series on AI and the 2024 election, which follows our four pre-election reports. Part I was published on May 9th, 2024, 180 days before the November election. Part II was published on August 7th, 2024, 90 days before the election. Part III was published on September 6th, 2024, 60 days before the election. Part IV was published on October 7th, 2024, 30 days before the election. For links to previous reports and our dataset, visit aielectionobservatory.com.

Introduction

The 2024 U.S. election has concluded. This was the first U.S. federal election since the mainstream popularization of generative AI. This technology democratizes access to tools capable of computationally creating realistic, high-quality audio, visual, and textual content. Many people expressed concerns that this technology was ripe for abuse, including efforts to manipulate the electoral process. Our project collected approximately 42 weeks of data leading up to the election. Our analysis found no evidence that generative AI negatively affected the process or the outcome of the 2024 U.S. election.

Early in this cycle, scholars and pundits claimed that AI would transform the upcoming election, in part, as one report put it, by “democratizing disinformation” and enabling “anyone [to] become a political content creator and seek to sway voters or the media.” In a widely viewed video, “The AI Dilemma,” Aza Raskin, co-founder of the Center for Humane Technology, claims that because of AI, “2024 will be the last human election.” CNBC host Brian Sullivan said, “There are terrifying possibilities with AI if used improperly to a vulnerable public, and the massive quick spread from social media.” These narratives reinforced claims that 2024 would be the “Generative AI Election.”

Some continued to paint a dystopian picture right up until the final votes were cast. Lawrence Lessig released an Election Day TED Talk, “How AI Could Hack Democracy,” warning that AI could overwhelm democratic systems by exploiting human cognitive biases, amplifying polarization, and diminishing trust in institutions. Lessig presents AI as a kind of malevolent force, which he calls “The Thing,” that could destabilize democracy through disinformation and manipulation.

Our comprehensive analysis found these claims to be unfounded. From our tracking of approximately 46,000 articles and of specific incidents, we see a public and media that engaged critically with, rather than passively accepted, AI-generated content and claims that such content had been used. The media coverage surrounding AI incidents frequently included fact-checking and analysis, indicating that AI-driven misinformation did not escape scrutiny.

Our research provides data that supports late-cycle and post-cycle opinion pieces such as “The year of the AI election that wasn’t,” and “it’s clear most of the fears about AI did not come to pass.” Below we discuss that data in depth.

The fears of AI destabilizing the 2024 U.S. election did not materialize.

Although media coverage about AI steadily increased throughout the year, peaking during periods of heightened political activity, our analysis surfaced no widespread effects on voter views or behavior. Our analysis instead shows that a wide range of media sources contributed to the conversation about AI in elections, reaching both general audiences and more specialized, tech-focused readers. Additionally, the media focused on themes of misinformation, election integrity, and foreign influence. Our data suggests that while AI was indeed a central topic of concern, the media and public responded critically, signaling a capacity to question and analyze AI-generated content rather than simply accepting it at face value. Every incident in our media tracker that involved generative AI had a corresponding fact-checking campaign that highlighted the use of AI in creating the content in question.

The rise of generative AI during this U.S. election cycle and its limited impact on the process and outcome together demonstrate the robustness of U.S. democratic institutions. Despite the alarming predictions, democratic resilience and public discernment remain strong. In other words, while AI can decrease the cost of creating misinformation, it has not rendered people incapable of critical engagement, nor has it dissolved the mechanisms through which democracies self-correct in the face of misinformation.

What we did and how

We began collecting news media mentions of AI and elections on January 21st, 2024. Our tracker covers only articles published by websites and newspapers based in the United States. It selects articles that contain at least one key term from each of the following three groups:

Misinformation, false information, fake news, disinformation, deception, impersonation, deepfake, synthetic media, AI-manipulated media, or fake.

Generative AI, AI-generated content, artificial intelligence, or robocall.

2024 election, presidential election, US election, election interference, voting integrity, election, democracy, Biden, Harris, Vance, Walz or Trump.

Using these criteria, we amassed a database of approximately 46,000 news articles from a wide range of sources. The entire database is open to the public and can be downloaded here.
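As an illustration only, the three-group selection rule can be sketched in Python. The term lists are copied from the groups above; everything else is an assumption, because the report does not say how matching was performed. This sketch lowercases the text and does a plain substring search, and the name `matches_criteria` is hypothetical.

```python
# Term groups copied from the tracker criteria above. The matching rule
# (case-insensitive substring search) is an assumption, not the project's code.
MISINFO_TERMS = [
    "misinformation", "false information", "fake news", "disinformation",
    "deception", "impersonation", "deepfake", "synthetic media",
    "ai-manipulated media", "fake",
]
AI_TERMS = [
    "generative ai", "ai-generated content", "artificial intelligence", "robocall",
]
ELECTION_TERMS = [
    "2024 election", "presidential election", "us election",
    "election interference", "voting integrity", "election", "democracy",
    "biden", "harris", "vance", "walz", "trump",  # vance/walz were added in July
]
TERM_GROUPS = [MISINFO_TERMS, AI_TERMS, ELECTION_TERMS]


def matches_criteria(text: str, groups=TERM_GROUPS) -> bool:
    """Return True if `text` contains at least one term from every group."""
    lowered = text.lower()
    return all(any(term in lowered for term in group) for group in groups)


print(matches_criteria("Deepfake robocall targets New Hampshire election"))  # True
print(matches_criteria("Fake news about the election"))  # False: no AI term
```

Plain substring matching is deliberately loose (for example, "fake" also matches "fake news"); a stricter tracker might use word-boundary regular expressions instead.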

To find instances of AI-generated election content, we grouped articles that had the same or similar titles across multiple domain names and read every article in those groups. This clustering helped us identify incidents. If an article described an incident in which generative AI was used to disseminate election-related information, we included that article in our reports.
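A minimal sketch of this kind of title clustering follows. It assumes Python's standard-library `difflib.SequenceMatcher` as the similarity measure and a hypothetical similarity threshold of 0.85; the report does not define what counted as a "similar" title. The `Article` and `Cluster` types and the `cluster_by_title` function are illustrative names, not part of the project's tooling.

```python
from dataclasses import dataclass, field
from difflib import SequenceMatcher


@dataclass
class Article:
    title: str
    domain: str


@dataclass
class Cluster:
    representative: str  # normalized title of the first article in the cluster
    articles: list = field(default_factory=list)

    def domains(self) -> set:
        return {a.domain for a in self.articles}


def normalize(title: str) -> str:
    # Lowercase and collapse whitespace so trivial differences are ignored.
    return " ".join(title.lower().split())


def cluster_by_title(articles, threshold=0.85, min_domains=2):
    """Greedily group articles with identical or similar titles, then keep
    only clusters that span at least `min_domains` distinct domains."""
    clusters = []
    for article in articles:
        norm = normalize(article.title)
        for cluster in clusters:
            if SequenceMatcher(None, norm, cluster.representative).ratio() >= threshold:
                cluster.articles.append(article)
                break
        else:  # no similar cluster found: start a new one
            clusters.append(Cluster(representative=norm, articles=[article]))
    return [c for c in clusters if len(c.domains()) >= min_domains]
```

The greedy pass compares each title against every existing cluster, so it is quadratic in the worst case; at tens of thousands of articles, grouping exact normalized titles first and comparing only the remainder would keep it tractable.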

Update Since October 7th, 2024

Using the methodology described above, this final update covers the 30-day period before the election, beginning on October 8th, 2024.

Fake FBI Videos Touting Election Fraud

An Atlantic Council investigation found that X accounts with no prior posting history posted inauthentic videos on November 4th and 5th. These videos pushed disinformation narratives alleging rigged votes, destroyed ballots, malfunctioning machines, and dead voters, and suggested that Kamala Harris had benefited from election fraud. The videos were edited to appear as if they had been created and disseminated by the FBI and national news outlets, displaying an FBI watermark alongside familiar CBS and MSNBC graphics.

The Council’s investigation suggested that the posts resembled those of the Russian disinformation campaign Operation Overload.

The investigation states that the videos were not widely distributed, receiving fewer than 7,000 views.

Perplexity AI Election Information Hub

Shortly before the 2024 election, the AI search engine Perplexity AI launched its Election Information Hub. The hub provides live updates and voter information. It uses live data from the Associated Press and Democracy Works to provide voter information such as polling requirements, locations, and times. Perplexity AI was the only major AI company to create tailored responses for election information. Other companies redirected their users to external sources.

The feature received some critical media coverage, including a Verge article. One screenshot of the Hub shows a “Future Madam POTUS” candidate listing, under which there is information about Vice President Harris. The article also claimed that Perplexity’s platform “failed to mention that Robert F. Kennedy, Jr., who’s on the ballot where [the author] live[s], had dropped out of the race.” Perplexity updated the Election Hub after The Verge published its article.

Stolen Identity Attack on Tim Walz

On October 21, 2024, a video went viral on social media depicting a man falsely claiming to be Matthew Metro, a former student of Democratic vice-presidential nominee Tim Walz, and accusing Walz of inappropriate conduct during his time as a high school teacher. The real Matthew Metro, who currently resides in Hawaii, confirmed in an interview with The Washington Post that he had never met Walz and was shocked to see someone using his identity to make these false allegations. Metro did attend a Minnesota school where Walz once taught, but he quickly dismissed the video as a fabrication, pointing out distinct physical differences between himself and the man in the video.

The video was initially posted on X by an account impersonating Metro and garnered millions of views. Social media users quickly questioned the video's legitimacy through the platform’s Community Notes feature, noting discrepancies between the man’s appearance and photos of the real Metro. Several experts consulted by The Post concluded that the video was likely a “cheap fake” of an actor impersonating Metro rather than a sophisticated AI-generated deepfake. The low video quality and apparent visual distortions suggested that, while the video might have been edited and compressed, it did not use advanced AI manipulation.

The Dispatch reported that a different false claim against Walz was linked to Russian disinformation efforts and that the Metro impersonation was in line with that strategy.

Recap of Database and Past Reports

Past Reports and Incidents

Over the ten months leading up to the 2024 U.S. election, we identified 19 incidents of varying impact. Nearly half did not involve generative AI, despite widespread assumptions of its use. Although AI was often mentioned in reporting about these incidents, those mentions were unfounded and unnecessary. We included these incidents because they were part of the public discourse on AI’s role in the 2024 election.

Our list includes:

Incidents Involving Generative AI:

DeSantis campaign using AI-generated images in video, June 5th, 2023.

Biden robocall in New Hampshire, January 22nd, 2024.

AI-generated images of Trump with black voters, March 4th, 2024.

Parody Kamala Harris campaign advertisement, July 26th, 2024.

Trump posts AI-generated images, August 18th, 2024.

AI-generated music videos containing political figures, August 21st, 2024.

Grok-2 images go viral on X, August 14th, 2024 to September 1st, 2024.

National Intelligence report on foreign influence operations using generative AI, September 6th, 2024.

Images related to comments made by Donald Trump about Springfield, Ohio, September 9th, 2024 - September 13th, 2024.

Fake image of Harris with Sean “Diddy” Combs, September 20th, 2024.

Senator Braun (R-Ind) uses digitally altered campaign advertisement, October 1st, 2024.

Incidents Not Involving Generative AI:

AI-generated image of Taylor Swift holding a “Trump won” flag, January 31st, 2024.

Biden “cheap fakes,” early June 2024. These images and videos were not AI-generated but were included in conversations about AI deepfake legislation.

DOJ fears of tampering with Biden-Hur audio clip, June 1st, 2024. This did not involve a specific instance of AI-generated political information, but the DOJ refrained from releasing an audio clip out of fear that the public could alter it.

Russian-operated social media bot farm, July 9th, 2024.

Alleged AI manipulation of images relating to the Trump assassination attempt, July 13th, 2024.

Foreign influence operations pivot away from using AI, August 8th, 2024. A Microsoft report highlights that while foreign influence operations use generative AI, many actors have pivoted back to traditional methods such as mischaracterizing content, simple digital manipulation, and the inclusion of trusted labels and logos.

Controversial images of Kamala Harris rally in Detroit, August 11th, 2024. Donald Trump accused Kamala Harris of using AI to create images depicting a large crowd at one of her rallies. There is no evidence that these images were AI-generated.

CNN broadcasts an altered photo of Donald Trump, September 13th, 2024.

To summarize, our previous four reports identified nineteen prominent incidents that involved the alleged or actual use of generative AI to convey election-related information. Three of the nineteen incidents used generative AI and were shared by a politician or their campaign. The remainder were either shared by the broader public, media companies, or foreign influence operations, or did not include generative AI content at all.

Selected Findings in the Data

Teasing out the relationship between communications content and popular effect is difficult even in the calmest of times, and the past ten months have been anything but calm. Twelve of our nineteen identified incidents occurred between July 2024 and September 2024. Some of the most unusual political events in decades also occurred during this period: after a disastrous debate, President Biden dropped out of the 2024 presidential race; Donald Trump survived an assassination attempt, and a now-famous photo was taken; and an incumbent Vice President took over the Democratic ticket. With the spotlight on these monumental events, AI faded into the background, relatively speaking.

Still, there was plenty of news about AI and elections. Our analysis reveals some interesting trends in the data that only stand out when one steps back from the frantic deluge of political news stories that has characterized this campaign season.

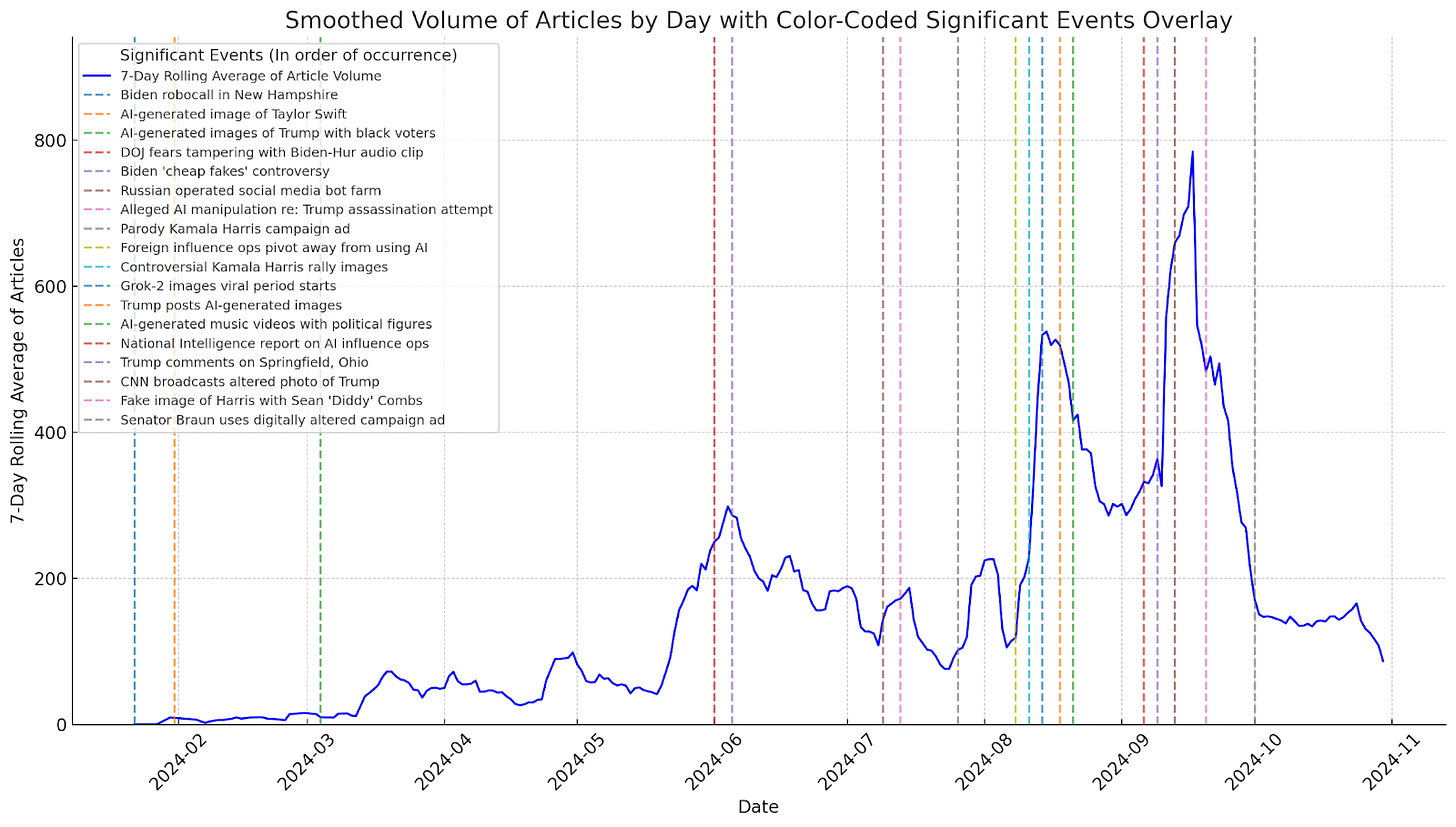

Publication Volume Over Time

Our analysis of publication trends revealed that coverage around AI and elections steadily increased throughout 2024, spiking significantly during politically intense periods:

Publication Peaks in August and September: The highest volume of articles occurred in September (12,710 articles) and August (10,396 articles). This period coincided with major political shifts, including high-profile debates and conversations around AI and political speech. The two spikes also coincided with the provocative release of AI tools like Grok-2 and with controversies involving AI-manipulated content in campaign ads. These spikes suggest that media attention closely followed the unfolding political landscape and events in which AI featured prominently.

Steady Growth Across the Year: From January through May, there was a steady rise in publication volume. Coverage of AI and elections began more moderately in the early months but escalated as the election drew near, reflecting growing public interest and concern over AI’s role in the electoral process. A high-volume period from June to September maintained consistent, elevated coverage, marking it as the period when AI was most scrutinized for its potential to influence political narratives.

These findings illustrate how AI's role in the election garnered progressively more media attention, particularly during periods of heightened political activity. This aligns with our qualitative analysis that identified significant events and incidents reported by various media outlets in the summer of 2024.

The volume of articles between February and May 2024 is substantially lower than the volume of articles after June 2024. Although our data is representative of media coverage during this time, it’s important to note that we added the names of the vice-presidential picks, Vance and Walz, to our search terms in July. This inclusion could have slightly broadened the scope of our data collection.
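Monthly tallies like the ones above can be reproduced with a few lines of Python. This is a sketch that assumes each article record carries a publication date; the helper names `monthly_volume` and `peak_months` are hypothetical, and the sample dates are made up rather than drawn from the dataset.

```python
from collections import Counter
from datetime import date


def monthly_volume(pub_dates):
    """Count articles per calendar month, keyed as 'YYYY-MM'."""
    return Counter(d.strftime("%Y-%m") for d in pub_dates)


def peak_months(counts, n=2):
    """Return the n highest-volume months as (month, count) pairs, largest first."""
    return counts.most_common(n)


# Illustrative dates only, not taken from the dataset.
sample = [date(2024, 3, 1), date(2024, 8, 1), date(2024, 8, 15),
          date(2024, 9, 2), date(2024, 9, 3), date(2024, 9, 30)]
print(peak_months(monthly_volume(sample)))  # [('2024-09', 3), ('2024-08', 2)]
```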

Top Sources and Their Influence

Our data analysis highlighted the diversity and influence of platforms contributing to the AI and election conversation:

High-Impact Platforms: Archive.org, Google.com, and Medium led in reach and publication volume, with archive.org alone contributing a substantial number of articles. The extensive reach that Mention's metrics assign to these platforms likely reflects the potential audience of the entire website, known as domain reach, rather than the readership of individual articles. Mention combines external data from the top 10 million websites with an internal model to estimate how many visitors these sites attract. This approach explains why widely used, highly visible platforms like archive.org, Google.com, and Medium registered substantial reach in our tracking.

Traditional Media's Role: Outlets like MSN, Fortune.com, and Time.com also had significant reach despite publishing fewer articles than archive.org. This highlights the influence of traditional media in shaping and contextualizing AI narratives for a broad audience.

Specialized Sources for Tech Audiences: Some niche or tech-focused platforms, such as Flipboard's computer science section (a topic group on the social magazine app), also appeared among high-reach sources, suggesting that tech-savvy readers had a concentrated interest in AI’s implications for elections. Their presence among high-reach sources reflects that discussions around AI were not limited to generalist platforms but resonated with specific, engaged audiences.

This diversity suggests that both mainstream and tech-focused audiences were highly engaged, with platforms like archive.org and Google acting as key amplifiers of AI-related content, making these topics accessible and visible across different reader segments.

Incident-Specific Coverage Over Time

In tracking media coverage of specific AI-related incidents during the 2024 election cycle, we wanted to capture the volume of articles that genuinely discussed each incident. To achieve this, we applied a keyword-based filtering approach designed to isolate articles directly relevant to each incident.

For each incident, we identified unique keywords and phrases closely associated with the event. For instance, the "Biden Robocall Incident" was filtered using terms like "Biden," "robocall," and "New Hampshire." This method was designed to ensure that articles included in the count explicitly referenced details or themes specific to the incident. Using these targeted keywords, we ran a case-insensitive search of article titles in our dataset, capturing those that directly discussed each incident. The result is a more accurate reflection of media coverage tied specifically to these incidents, avoiding incidental mentions or unrelated articles published around the same time.
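The title filter described above might look like the following sketch. The report says only that titles were searched case-insensitively for incident keywords; it does not say whether a title had to contain every keyword or just one, so the hypothetical `count_incident_articles` function exposes both rules through a `mode` parameter. The sample titles are invented.

```python
def count_incident_articles(titles, keywords, mode="all"):
    """Count titles that mention an incident's keywords (case-insensitive).

    mode="all": a title must contain every keyword.
    mode="any": a title must contain at least one keyword.
    Which rule the report used is not stated; both are assumptions.
    """
    if mode not in ("all", "any"):
        raise ValueError("mode must be 'all' or 'any'")
    combine = all if mode == "all" else any
    needles = [k.casefold() for k in keywords]
    return sum(
        1 for title in titles
        if combine(needle in title.casefold() for needle in needles)
    )


# Invented titles for illustration; keywords from the robocall example above.
titles = [
    "Fake Biden robocall hits New Hampshire voters",
    "Biden campaign launches new ad",
    "New Hampshire primary results",
]
keywords = ["Biden", "robocall", "New Hampshire"]
print(count_incident_articles(titles, keywords))              # 1
print(count_incident_articles(titles, keywords, mode="any"))  # 3
```

The choice matters: requiring every keyword yields a narrow, incident-specific count, while accepting any keyword also counts every title that mentions a broad term such as "AI" or "Trump."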

Results

Kamala Harris Rally AI Accusation: Received 19,097 articles. Keywords: “Kamala Harris,” “AI,” “crowd,” “rally,” and “Detroit.”

Foreign Influence Pivot from AI: This topic, focusing on shifts in foreign influence strategies, generated 17,196 articles. Keywords: “foreign influence,” “pivot,” and “AI.”

National Intelligence Report on Foreign Influence: Discussing AI in foreign influence operations, this report resulted in 17,182 articles. Keywords: “National Intelligence,” “foreign influence,” and “AI.”

Senator Braun’s AI Campaign Ad: Coverage surrounding AI in campaign advertising yielded 17,161 articles. Keywords: “Senator Braun,” “AI,” and “campaign ad.”

Trump AI-Generated Images: This incident saw 7,405 articles. Keywords: “Trump,” “AI-generated,” and “image.”

AI Images of Trump with Black Voters: Generated 7,002 articles. Keywords: “AI-generated,” “Trump,” and “black voters.”

CNN Airing Altered Trump Image: Attracted 6,953 articles. Keywords: “CNN,” “altered,” “Trump,” and “image.”

Trump Comments on Springfield, Ohio: Trump’s comments generated 6,234 articles. Keywords: “Trump,” “Springfield,” “Ohio,” and “comments.”

Trump Assassination Attempt AI Manipulation: Resulted in 6,164 articles. Keywords: “Trump,” “assassination attempt,” and “AI manipulation.”

Taylor Swift "Trump Won" Flag: This AI-generated image incident yielded 3,271 articles. Keywords: “Taylor Swift,” “Trump Won,” and “flag.”

Fake Image of Harris and Sean “Diddy” Combs: Resulted in 2,367 articles. Keywords: “Kamala Harris,” “Sean Combs,” “Diddy,” and “fake image.”

Parody Kamala Harris Campaign Ad: Attracted 2,268 articles. Keywords: “Kamala Harris,” “parody,” and “campaign ad.”

Biden Robocall Incident: This incident generated 2,243 articles. Keywords: “Biden,” “robocall,” and “New Hampshire.”

Biden “Cheap Fakes”: This discussion on manipulated media garnered 2,174 articles. Keywords: “Biden,” “cheap fake,” “D-Day,” and “AI legislation.”

DOJ Fears Over Biden Audio Tampering: Received 2,094 articles. Keywords: “DOJ,” “Biden,” and “audio tampering.”

DeSantis Campaign AI Video: Generated 1,943 articles. Keywords: “DeSantis,” “AI-generated,” “Fauci,” and “video.”

Russian Bot Farm Disruption: Resulted in 1,931 articles. Keywords: “Russian,” “bot farm,” and “social media.”

Grok-2 Launch and Viral Images: Yielded 1,423 articles. Keywords: “Grok-2,” “viral images,” “xAI,” and “Elon Musk.”

AI-Generated Music Videos with Politicians: Produced 1,306 articles. Keywords: “AI-generated,” “music video,” and “politicians.”

The results from our data analysis shed light on key themes that the media prioritized when covering AI-related incidents throughout the 2024 election cycle. Specifically, the analysis shows that the most widely covered incidents involved the use or alleged misuse of AI in political imagery. Events like the Kamala Harris rally accusation, Senator Braun’s campaign ad, and AI-generated images of Trump were among those that garnered the highest media attention. This pattern suggests that the media was particularly sensitive to how AI could influence public perceptions of political figures and campaign narratives.

Additionally, incidents connected to foreign influence, such as the National Intelligence report on AI in disinformation campaigns and reports that foreign actors were pivoting away from AI toward traditional methods, also received extensive coverage. This emphasis on foreign influence points to heightened awareness, among both the media and the public, of election integrity and the role that AI could play in international influence operations.

Prominent figures such as Taylor Swift, Donald Trump, and Kamala Harris also featured heavily in AI-related narratives, especially when AI-generated or manipulated content depicted them in controversial or fabricated scenarios. Stories such as the “Trump Won” flag with Taylor Swift, altered Trump images, and manipulated photos of Harris show that AI-driven content intersected with celebrity, political, and internet culture, drawing significant public interest. This pattern highlights a dual media priority: not only tracking AI’s role in political influence but also examining its broader cultural impact.

In aggregate, the media covered incidents that aligned with themes of misinformation, election integrity, foreign influence, and popular culture personalities. The news volume and topics indicate that coverage of AI’s role in the 2024 election cycle reflected larger anxieties about the election and, specifically, about misinformation. Coverage of incidents involving AI-generated or manipulated content, whether in political ads, imagery of notable figures, or foreign influence campaigns, tended to cement the connection between generative AI and misinformation.

Conclusion

Our comprehensive review of the 2024 U.S. election cycle reveals a nuanced interaction between generative AI, media coverage, and public engagement. The initial concerns raised by scholars and commentators about AI's transformative, potentially destabilizing effects on democracy did not materialize. Generative AI did not create a deluge of misinformation. Although AI-related incidents involving fake images, deepfakes, and misinformation campaigns did play a role in the election narrative, our findings show that these incidents were always met with significant scrutiny from the media, fact-checking institutions, and social media users. The American electorate and media demonstrated resilience and critical engagement, helping to mitigate the potential disruptive effects of AI-generated content.

Invitation to Collaborate

This concludes our AI and the U.S. election project. However, researchers around the world will doubtless continue to collect information about, and analyze, this year’s election. In this short summary we were not able to discuss every angle of this issue and data in full. We therefore welcome feedback on our analysis and encourage the use of our dataset, which is available here.
